Dovecot + Maildir + Ubuntu 14.04 LTS Upgrade

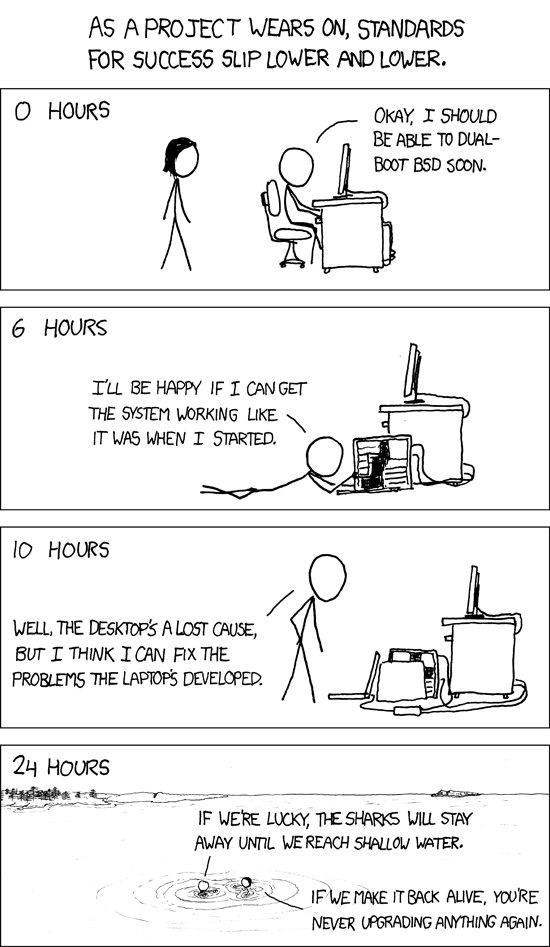

I recently upgraded the server behind this site to Ubuntu 14.04 LTS from 12.04 LTS (only about a year late!)

A few things went awry (the PHP install couldn’t talk to MySQL, for example), but a reboot cleared that right up. However, one piece remained broken: mail wasn’t being delivered properly.

I don’t use this server for mail much myself, but some people (like my brother) do. It was broken, and I had no idea why. It didn’t help that the last time I touched the config was over a year ago. It also doesn’t help that mail server setup is basically a dark art. Regardless, after a few days of poking at it and “hoping the problem would go away”, I decided to go at it again today. Hopefully by writing this down I’ll remember a bit more about my setup, but if not, at least I’ll have this handy reference when I forget it again.

The way mail is set up on this server is that Postfix listens for incoming SMTP traffic, which it then forwards to Dovecot for delivery. Dovecot is set up to use the Maildir format, but instead of storing the maildirs in users’ home directories, it stores them in /var/mail/ since not all the users even have home directories. It made sense at the time, and I think it still makes sense now!

At any rate, with Dovecot’s upgrade came a problem, and after digging around, I saw in the logs that Dovecot was unable to deliver mail to /home/

It turns out that Ubuntu’s Dovecot packaging added a new configuration file, /etc/dovecot/conf.d/99-mail-stack-delivery.conf, which overrode my mail_location setting. I set the mail_location back to /var/mail/
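For the next time this bites me, here is a rough Python sketch for spotting which files under /etc/dovecot/conf.d set mail_location. It assumes the stock layout where dovecot.conf pulls in conf.d/*.conf in sorted filename order and a later setting overrides an earlier one, which is why a 99- file would win. The function name `find_mail_location` is my own invention, and the script only does a simple line match, so it ignores nested includes and anything set in dovecot.conf itself.

```python
import re
from pathlib import Path

# Matches an uncommented "mail_location = ..." line and captures the value.
SETTING_RE = re.compile(r"^\s*mail_location\s*=\s*(.*?)\s*$")


def find_mail_location(conf_dir):
    """Return (filename, value) pairs for each mail_location line, in sorted file order."""
    hits = []
    for path in sorted(Path(conf_dir).glob("*.conf")):
        for line in path.read_text(errors="replace").splitlines():
            match = SETTING_RE.match(line)
            if match:
                hits.append((path.name, match.group(1)))
    return hits


if __name__ == "__main__":
    hits = find_mail_location("/etc/dovecot/conf.d")
    for name, value in hits:
        print(f"{name}: mail_location = {value}")
    if hits:
        # Assumption: the last file in sorted order has the final say.
        print(f"Probably in effect: the value from {hits[-1][0]}")
```

Dovecot’s own `doveconf -n` prints the non-default settings it actually ended up with, so that is the more trustworthy check; the script is only useful for finding which file to edit.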

Now, I don’t know if this means it’s fixed; my brother will have to let me know. Regardless, it’s at least less broken now.